Poker Neural Network Example

By Dr. Michael J. Garbade

The computer looked up two copies of the same network in its neural network, namely for the first three shared cards and then again for the final two, trained on 10,000 randomly drawn poker games.

Neural Networks

Artificial neural networks are computational models inspired by biological nervous systems, capable of approximating functions that depend on a large number of inputs. A network is defined by a connectivity structure and a set of weights between interconnected processing units ('neurons').

Neural networks (NN), also called artificial neural networks (ANN), are a subset of learning algorithms within the machine learning field that are loosely based on the concept of biological neural networks.

Andrey Bulezyuk, who is a German-based machine learning specialist with more than five years of experience, says that “neural networks are revolutionizing machine learning because they are capable of efficiently modeling sophisticated abstractions across an extensive range of disciplines and industries.”

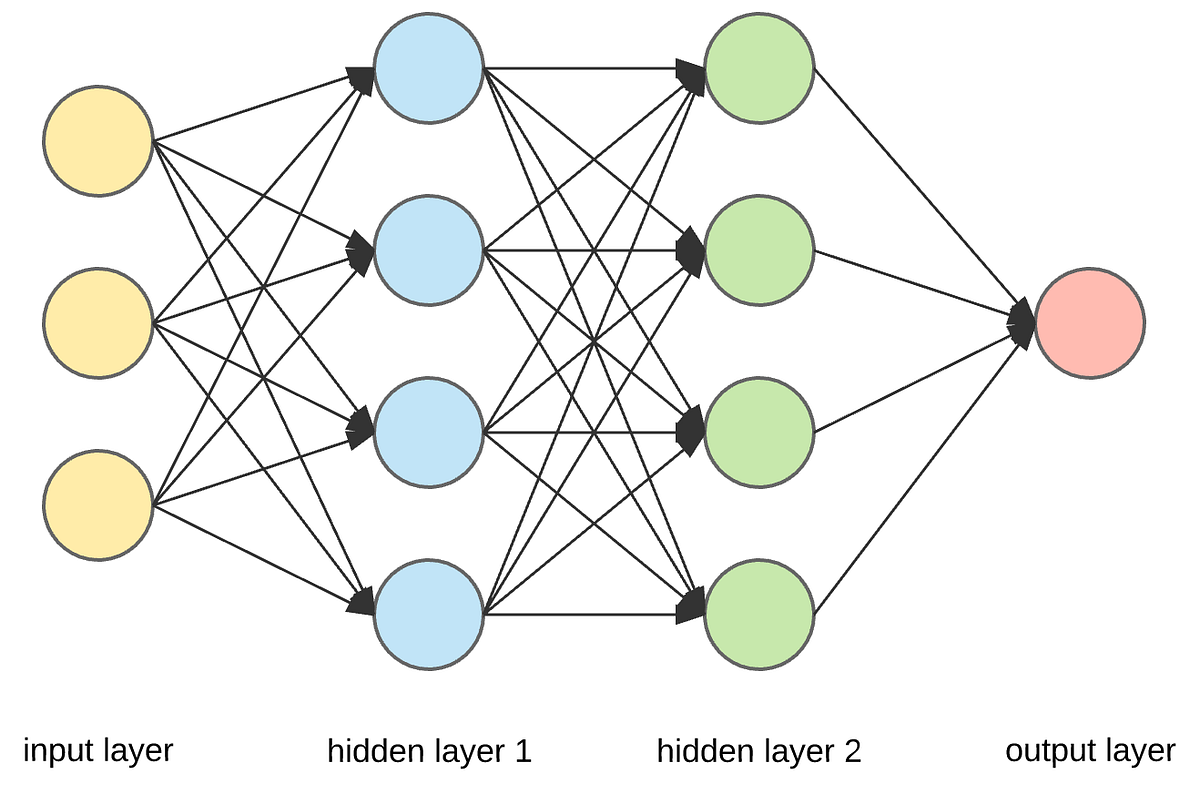

Basically, an ANN comprises the following components:

- An input layer that receives data and passes it on

- A hidden layer

- An output layer

- Weights between the layers

- A deliberate activation function for every hidden layer. In this simple neural network Python tutorial, we’ll employ the Sigmoid activation function.

There are several types of neural networks. In this project, we are going to create a feed-forward, or perceptron, neural network. This type of ANN relays data directly from the front to the back.

Training a feed-forward network often requires back-propagation, which is driven by a set of inputs and their corresponding expected outputs. When the input data is passed into the neuron, it is processed, and an output is generated.

Here is a diagram that shows the structure of a simple neural network:

And, the best way to understand how neural networks work is to learn how to build one from scratch (without using any library).

In this article, we’ll demonstrate how to use the Python programming language to create a simple neural network.

The problem

Here is a table that shows the problem.

| | Input 1 | Input 2 | Input 3 | Output |
| --- | --- | --- | --- | --- |
| Training data 1 | 0 | 0 | 1 | 0 |
| Training data 2 | 1 | 1 | 1 | 1 |
| Training data 3 | 1 | 0 | 1 | 1 |
| Training data 4 | 0 | 1 | 1 | 0 |
| New Situation | 1 | 0 | 0 | ? |

We are going to train the neural network such that it can predict the correct output value when provided with a new set of data.

As you can see on the table, the value of the output is always equal to the first value in the input section. Therefore, we expect the value of the output (?) to be 1.

Let’s see if we can use some Python code to give the same result (You can peruse the code for this project at the end of this article before continuing with the reading).

Creating a NeuralNetwork Class

We’ll create a NeuralNetwork class in Python to train the neuron to give an accurate prediction. The class will also have other helper functions.

Even though we’ll not use a neural network library for this simple neural network example, we’ll import the numpy library to assist with the calculations.

The library comes with the following four important methods:

- exp—for generating the natural exponential

- array—for generating a matrix

- dot—for multiplying matrices

- random—for generating random numbers. Note that we’ll seed the random number generator so that the results are reproducible from run to run.

Applying the Sigmoid function

We’ll use the Sigmoid function, which draws a characteristic “S”-shaped curve, as an activation function to the neural network.

This function can map any value to a value from 0 to 1. It will assist us to normalize the weighted sum of the inputs.

Thereafter, we’ll create the derivative of the Sigmoid function to help in computing the essential adjustments to the weights.

The output of a Sigmoid function can be employed to generate its derivative. For example, if the output variable is “x”, then its derivative will be x * (1-x).
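As a minimal sketch of the two helpers described above, the Sigmoid and its derivative can be written with numpy as follows. The function names `sigmoid` and `sigmoid_derivative` are our own choice for illustration, and the derivative takes the Sigmoid's output, not its raw input.

```python
import numpy as np


def sigmoid(x):
    """Map any real value (or array of values) into the range 0 to 1."""
    return 1 / (1 + np.exp(-x))


def sigmoid_derivative(output):
    """Slope of the Sigmoid, written in terms of the Sigmoid's own output."""
    return output * (1 - output)


# Apply both helpers to a few sample weighted sums.
out = sigmoid(np.array([-4.0, 0.0, 4.0]))
slopes = sigmoid_derivative(out)
print(out)
print(slopes)
```

The slope is largest when the output is 0.5 and shrinks as the output approaches 0 or 1, so confident outputs lead to smaller weight adjustments.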

Training the model

This is the stage where we’ll teach the neural network to make an accurate prediction. Every input will have a weight—either positive or negative.

This implies that an input with a large positive weight or a large negative weight will influence the resulting output more.

Remember that we began by assigning a random number to every weight.

Here is the procedure for the training process we used in this neural network example problem:

- We took the inputs from the training dataset, adjusted them by their weights, and passed them through a method that computed the output of the ANN.

- We computed the error to back-propagate. In this case, it is the difference between the expected output from the training dataset and the neuron’s predicted output.

- Based on the size of the error, we made small adjustments to the weights using the Error Weighted Derivative formula.

- We iterated this process an arbitrary number of 15,000 times. In every iteration, the whole training set is processed simultaneously.
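The four steps above can be sketched as a small class. This is an illustration under stated assumptions, not the original listing: the names `think`, `train`, and `synaptic_weights`, the seed value, and the choice of starting weights between -1 and 1 are ours.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_derivative(output):
    return output * (1 - output)


class NeuralNetwork:
    def __init__(self, seed=1):
        # Seeding makes the random starting weights reproducible.
        rng = np.random.default_rng(seed)
        # One neuron with 3 inputs and 1 output; weights start in [-1, 1).
        self.synaptic_weights = 2 * rng.random((3, 1)) - 1

    def think(self, inputs):
        # Weighted sum of the inputs, squashed by the Sigmoid.
        return sigmoid(np.dot(inputs, self.synaptic_weights))

    def train(self, inputs, outputs, iterations):
        for _ in range(iterations):
            output = self.think(inputs)   # forward pass on the whole set
            error = outputs - output      # expected minus predicted
            # Error weighted derivative: input * error * Sigmoid slope.
            adjustments = np.dot(inputs.T, error * sigmoid_derivative(output))
            self.synaptic_weights += adjustments


training_inputs = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
training_outputs = np.array([[0, 1, 1, 0]]).T

network = NeuralNetwork()
network.train(training_inputs, training_outputs, 15000)
prediction = network.think(np.array([1, 0, 0]))
print(prediction)
```

Because every iteration processes the whole training set as one matrix, a single `np.dot` call computes the adjustment for all four examples at once.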

We used the “.T” attribute to transpose the matrix from a horizontal (row) layout to a vertical (column) layout. Therefore, the numbers will be stored this way:
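A short sketch of the transposition, assuming the expected outputs from the problem table are first written as a single row (the variable names are ours):

```python
import numpy as np

# Expected outputs for the four training examples, written as one row.
row = np.array([[0, 1, 1, 0]])

# .T flips the 1 x 4 row into a 4 x 1 column, one output per training example.
column = row.T

print(row.shape)
print(column.shape)
print(column)
```

The column layout lines each expected output up with the matching row of the training inputs, which is what the error calculation needs.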

Ultimately, the weights of the neuron will be optimized for the provided training data. Consequently, if the neuron is made to think about a new situation that is similar to the ones it has seen, it can make an accurate prediction. This is how back-propagation takes place.

Wrapping up

Finally, we initialized the NeuralNetwork class and ran the code.

Here is the entire code for this project on how to make a neural network in Python:

Here is the output for running the code:

We managed to create a simple neural network.

The neuron began by allocating itself some random weights. Thereafter, it trained itself using the training examples.

Consequently, when it was presented with a new situation [1,0,0], it gave the value of 0.9999584.

You remember that the correct answer we wanted was 1?

Then, that’s very close—considering that the Sigmoid function outputs values between 0 and 1.

Of course, we only used a one-neuron network to carry out this simple task. What if we connected several thousand of these artificial neurons together? Could we possibly mimic how the human mind works 100%?

Do you have any questions or comments?

Please provide them below.

Bio: Dr. Michael J. Garbade is the founder and CEO of Los Angeles-based blockchain education company LiveEdu. It’s the world’s leading platform that equips people with practical skills on creating complete products in future technological fields, including machine learning.

Artificial neural networks are statistical learning models, inspired by biological neural networks (central nervous systems, such as the brain), that are used in machine learning. These networks are represented as systems of interconnected “neurons”, which send messages to each other. The connections within the network can be systematically adjusted based on inputs and outputs, making them ideal for supervised learning.

Neural networks can be intimidating, especially for people with little experience in machine learning and cognitive science! However, through code, this tutorial will explain how neural networks operate. By the end, you will know how to build your own flexible, learning network, similar to Mind.

The only prerequisites are having a basic understanding of JavaScript, high-school Calculus, and simple matrix operations. Other than that, you don’t need to know anything. Have fun!

Understanding the Mind

A neural network is a collection of “neurons” with “synapses” connecting them. The collection is organized into three main parts: the input layer, the hidden layer, and the output layer. Note that you can have n hidden layers, with the term “deep” learning implying multiple hidden layers.

Screenshot taken from this great introductory video, which trains a neural network to predict a test score based on hours spent studying and sleeping the night before.

Hidden layers are necessary when the neural network has to make sense of something really complicated, contextual, or non-obvious, like image recognition. The term “deep” learning came from having many hidden layers. These layers are known as “hidden”, since they are not visible as a network output. Read more about hidden layers here and here.

The circles represent neurons and lines represent synapses. Synapses take the input and multiply it by a “weight” (the “strength” of the input in determining the output). Neurons add the outputs from all synapses and apply an activation function.

Training a neural network basically means calibrating all of the “weights” by repeating two key steps, forward propagation and back propagation.

Since neural networks are great for regression, the best input data are numbers (as opposed to discrete values, like colors or movie genres, whose data is better for statistical classification models). The output data will be a number within a range such as 0 to 1 (this ultimately depends on the activation function; more on this below).

In forward propagation, we apply a set of weights to the input data and calculate an output. For the first forward propagation, the set of weights is selected randomly.

In back propagation, we measure the margin of error of the output and adjust the weights accordingly to decrease the error.

Neural networks repeat both forward and back propagation until the weights are calibrated to accurately predict an output.

Next, we’ll walk through a simple example of training a neural network to function as an “Exclusive or” (“XOR”) operation to illustrate each step in the training process.

Forward Propagation

Note that all calculations will show figures truncated to the thousandths place.

The XOR function can be represented by the mapping of the below inputs and outputs, which we’ll use as training data. It should provide a correct output given any input acceptable by the XOR function.
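For reference, the XOR mapping can be written out as training data in a few lines. The array names below are our own, and Python is used for consistency with the other sketches in this text.

```python
import numpy as np

# Each row of xor_inputs is one (input 1, input 2) pair.
xor_inputs = np.array([[0, 0],
                       [0, 1],
                       [1, 0],
                       [1, 1]])

# XOR is 1 only when exactly one of the two inputs is 1.
xor_targets = np.array([[0],
                        [1],
                        [1],
                        [0]])

for pair, target in zip(xor_inputs, xor_targets):
    print(tuple(int(v) for v in pair), "=>", int(target[0]))
```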

Let’s use the last row from the above table, (1, 1) => 0, to demonstrate forward propagation:

Note that we use a single hidden layer with only three neurons for this example.

We now assign weights to all of the synapses. Note that these weights are selected randomly (based on a Gaussian distribution) since it is the first time we’re forward propagating. In this example the initial weights are between 0 and 1, but note that the final weights don’t need to be.

We sum the product of the inputs with their corresponding set of weights to arrive at the first values for the hidden layer. You can think of the weights as measures of influence the input nodes have on the output.

We write these sums in smaller type inside the circles, because they’re not the final values:

To get the final value, we apply the activation function to the hidden layer sums. Activation functions transform the input signal into an output signal, and they are necessary for neural networks to model complex non-linear patterns that simpler models might miss.

There are many types of activation functions—linear, sigmoid, hyperbolic tangent, even step-wise. To be honest, I don’t know why one function is better than another.

Table taken from this paper.
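To make the four families named above concrete, here is a rough sketch of each one. The function names and the step threshold of 0 are assumptions made for illustration.

```python
import numpy as np


def linear(x):
    # Passes the sum through unchanged, so it adds no non-linearity.
    return x


def sigmoid(x):
    # Smooth "S" curve with outputs between 0 and 1.
    return 1 / (1 + np.exp(-x))


def hyperbolic_tangent(x):
    # Similar "S" shape, but with outputs between -1 and 1.
    return np.tanh(x)


def step(x):
    # Hard threshold (assumed at 0): output is either 0 or 1.
    return np.where(x >= 0, 1.0, 0.0)


sums = np.array([-2.0, 0.0, 2.0])
for fn in (linear, sigmoid, hyperbolic_tangent, step):
    print(fn.__name__, fn(sums))
```

One practical difference is that the sigmoid and hyperbolic tangent have a usable slope everywhere, which back propagation relies on, while the step function's slope is zero wherever it is defined.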

For our example, let’s use the sigmoid function for activation. The sigmoid function looks like this, graphically:

And applying S(x) to the three hidden layer sums, we get:

We add that to our neural network as hidden layer results:

Then, we sum the product of the hidden layer results with the second set of weights (also determined at random the first time around) to determine the output sum.

Finally, we apply the activation function to get the final output result.

This is our full diagram:

Because we used a random set of initial weights, the value of the output neuron is off the mark; in this case by +0.77 (since the target is 0). If we stopped here, this set of weights would be a great neural network for inaccurately representing the XOR operation.
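The whole forward pass for the input (1, 1) can be sketched in a few lines. The weights below are assumed for illustration, since the diagrams with the starting values are not reproduced in this text; the variable names are also ours.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


inputs = np.array([1.0, 1.0])

# ASSUMED illustrative weights, one column per hidden neuron.
input_to_hidden = np.array([[0.8, 0.4, 0.3],
                            [0.2, 0.9, 0.5]])
# ASSUMED illustrative weights, one per hidden neuron.
hidden_to_output = np.array([0.3, 0.5, 0.9])

hidden_sums = inputs @ input_to_hidden           # weighted sums into hidden layer
hidden_results = sigmoid(hidden_sums)            # activation applied to each sum
output_sum = hidden_results @ hidden_to_output   # weighted sum into output neuron
output_result = sigmoid(output_sum)              # final output of the network

print(hidden_sums)
print(hidden_results)
print(output_sum, output_result)
```

With these assumed weights the output comes to roughly 0.77, while the target for (1, 1) is 0.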

Let’s fix that by using back propagation to adjust the weights to improve the network!

Back Propagation

To improve our model, we first have to quantify just how wrong our predictions are. Then, we adjust the weights accordingly so that the margin of error decreases.

Similar to forward propagation, back propagation calculations occur at each “layer”. We begin by changing the weights between the hidden layer and the output layer.

Calculating the incremental change to these weights happens in two steps: 1) we find the margin of error of the output result (what we get after applying the activation function) to back out the necessary change in the output sum (we call this delta output sum) and 2) we extract the change in weights by multiplying delta output sum by the hidden layer results.

The output sum margin of error is the target output result minus the calculated output result:

And doing the math:

To calculate the necessary change in the output sum, or delta output sum, we take the derivative of the activation function and apply it to the output sum. In our example, the activation function is the sigmoid function.

To refresh your memory, the activation function, sigmoid, takes the sum and returns the result:

So the derivative of sigmoid, also known as sigmoid prime, will give us the rate of change (or “slope”) of the activation function at the output sum:

Since the output sum margin of error is the difference in the result, we can simply multiply that with the rate of change to give us the delta output sum:

Conceptually, this means that the change in the output sum is the margin of error scaled by the slope of the sigmoid (sigmoid prime) at the output sum. Doing the actual math, we get:

Here is a graph of the Sigmoid function to give you an idea of how we are using the derivative to move the input in the right direction. Note that this graph is not to scale.
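As a sketch of this calculation, assume an output sum of about 1.233, which is what a forward pass produces with illustrative starting weights of our own choosing. The names `sigmoid_prime`, `margin_of_error`, and `delta_output_sum` are ours.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_prime(x):
    # Slope of the sigmoid at x.
    s = sigmoid(x)
    return s * (1 - s)


target = 0.0
output_sum = 1.233                    # ASSUMED value from an illustrative forward pass
output_result = sigmoid(output_sum)   # about 0.77

# Target output result minus calculated output result.
margin_of_error = target - output_result

# Scale the error by the slope of the activation at the output sum.
delta_output_sum = sigmoid_prime(output_sum) * margin_of_error

print(margin_of_error)
print(delta_output_sum)
```

The result is negative, which tells us the output sum has to come down for the output to move toward the target of 0.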

Now that we have the proposed change in the output layer sum (-0.13), let’s use this in the derivative of the output sum function to determine the new change in weights.

As a reminder, the mathematical definition of the output sum is the product of the hidden layer result and the weights between the hidden and output layer:

The derivative of the output sum is:

...which can also be represented as:

This relationship suggests that a greater change in output sum yields a greater change in the weights; input neurons with the biggest contribution (higher weight to output neuron) should experience more change in the connecting synapse.

Let’s do the math:
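A sketch of this step, using the multiplication described earlier (delta output sum times the hidden layer results). The hidden results and starting weights below are assumed for illustration; only the delta output sum of -0.13 comes from the text.

```python
import numpy as np

delta_output_sum = -0.13                         # proposed change quoted in the text
hidden_results = np.array([0.731, 0.786, 0.690]) # ASSUMED hidden layer results
old_weights = np.array([0.3, 0.5, 0.9])          # ASSUMED hidden-to-output weights

# Each weight changes in proportion to the hidden result feeding it.
delta_weights = delta_output_sum * hidden_results
new_weights = old_weights + delta_weights

print(delta_weights)
print(new_weights)
```

All three weights shrink here because the output was too high, and the synapse fed by the largest hidden result shrinks the most.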

To determine the change in the weights between the input and hidden layers, we perform a similar, but notably different, set of calculations. Note that in the following calculations, we use the initial weights instead of the recently adjusted weights from the first part of the backward propagation.

Remember that the relationship between the hidden result, the weights between the hidden and output layer, and the output sum is:

Instead of deriving for output sum, let’s derive for hidden result as a function of output sum to ultimately find out delta hidden sum:

Also, remember that the change in the hidden result can also be defined as:

Let’s multiply both sides by sigmoid prime of the hidden sum:

All of the pieces in the above equation can be calculated, so we can determine the delta hidden sum:

Once we get the delta hidden sum, we calculate the change in weights between the input and hidden layer by multiplying it by the input data, (1, 1). The input data here plays the same role as the hidden results did in the earlier back propagation step that determined the change in the hidden-to-output weights. Here is the derivation of that relationship, similar to the one before:

Let’s do the math:

Here are the new weights, right next to the initial random starting weights as comparison:

Once we arrive at the adjusted weights, we start again with forward propagation. When training a neural network, it is common to repeat both these processes thousands of times (by default, Mind iterates 10,000 times).

And doing a quick forward propagation, we can see that the final output here is a little closer to the expected output:

Through just one iteration of forward and back propagation, we’ve already improved the network!!
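Putting the pieces together, one full iteration of forward and back propagation might look like the sketch below. It uses the standard gradient form (multiplying by the layer's inputs), illustrative starting weights that we assume for the example, and names of our own choosing, so the exact figures may differ from the diagrams.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_prime(x):
    s = sigmoid(x)
    return s * (1 - s)


def forward(inputs, w_ih, w_ho):
    hidden_sums = inputs @ w_ih
    hidden_results = sigmoid(hidden_sums)
    output_sum = hidden_results @ w_ho
    return hidden_sums, hidden_results, output_sum, sigmoid(output_sum)


inputs, target = np.array([1.0, 1.0]), 0.0
w_ih = np.array([[0.8, 0.4, 0.3],     # ASSUMED input-to-hidden weights
                 [0.2, 0.9, 0.5]])
w_ho = np.array([0.3, 0.5, 0.9])      # ASSUMED hidden-to-output weights

hidden_sums, hidden_results, output_sum, before = forward(inputs, w_ih, w_ho)

# Output layer: error scaled by the slope at the output sum.
delta_output_sum = sigmoid_prime(output_sum) * (target - before)
delta_w_ho = delta_output_sum * hidden_results

# Hidden layer: uses the INITIAL hidden-to-output weights, not the adjusted ones.
delta_hidden_sum = delta_output_sum * w_ho * sigmoid_prime(hidden_sums)
delta_w_ih = np.outer(inputs, delta_hidden_sum)

# Apply both sets of changes, then forward propagate again.
*_, after = forward(inputs, w_ih + delta_w_ih, w_ho + delta_w_ho)

print(before, after)
```

Under these assumptions the second forward pass lands closer to the target of 0 than the first, and repeating the loop thousands of times is what calibrates the weights.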

Check out this short video for a great explanation of identifying global minima in a cost function as a way to determine necessary weight changes.

If you enjoyed learning about how neural networks work, check out Part Two of this post to learn how to build your own neural network.